Navigating AI Regulation: A Smarter Way to Stay Compliant

AI is evolving fast, and with it comes a growing web of regulations, ethical considerations, and business risks. Whether you’re developing AI-powered products, integrating machine learning into your operations, or simply exploring automation, understanding the regulatory landscape is critical.

To help businesses assess their AI projects efficiently, I’ve put together a practical AI Regulation Checklist. It’s designed to help organisations:

✅ Identify potential compliance risks before AI deployment

✅ Ensure responsible AI governance with clear oversight and accountability

✅ Mitigate risks like data privacy breaches, algorithmic bias, and regulatory non-compliance

✅ Stay ahead of evolving AI laws and ethical best practices

This isn’t just about ticking boxes – it’s about future-proofing your AI strategy. If your team is working with AI, this checklist is a must-have to make sure your projects align with industry standards, legal requirements, and ethical AI principles.

Want access? Download the checklist now and take control of AI compliance.

Download the checklist

AI Regulation & Policies Information

AI Benefits vs. Risks

AI brings opportunities but also concerns like bias, hallucinations, cybersecurity threats, and copyright issues. Many companies only realise the full scope of risks as they scale AI adoption.

⚖ Strategic AI Investment

To maximise impact, businesses should plan their AI strategy carefully, assess alternatives, and stay agile enough to adapt as conditions change.

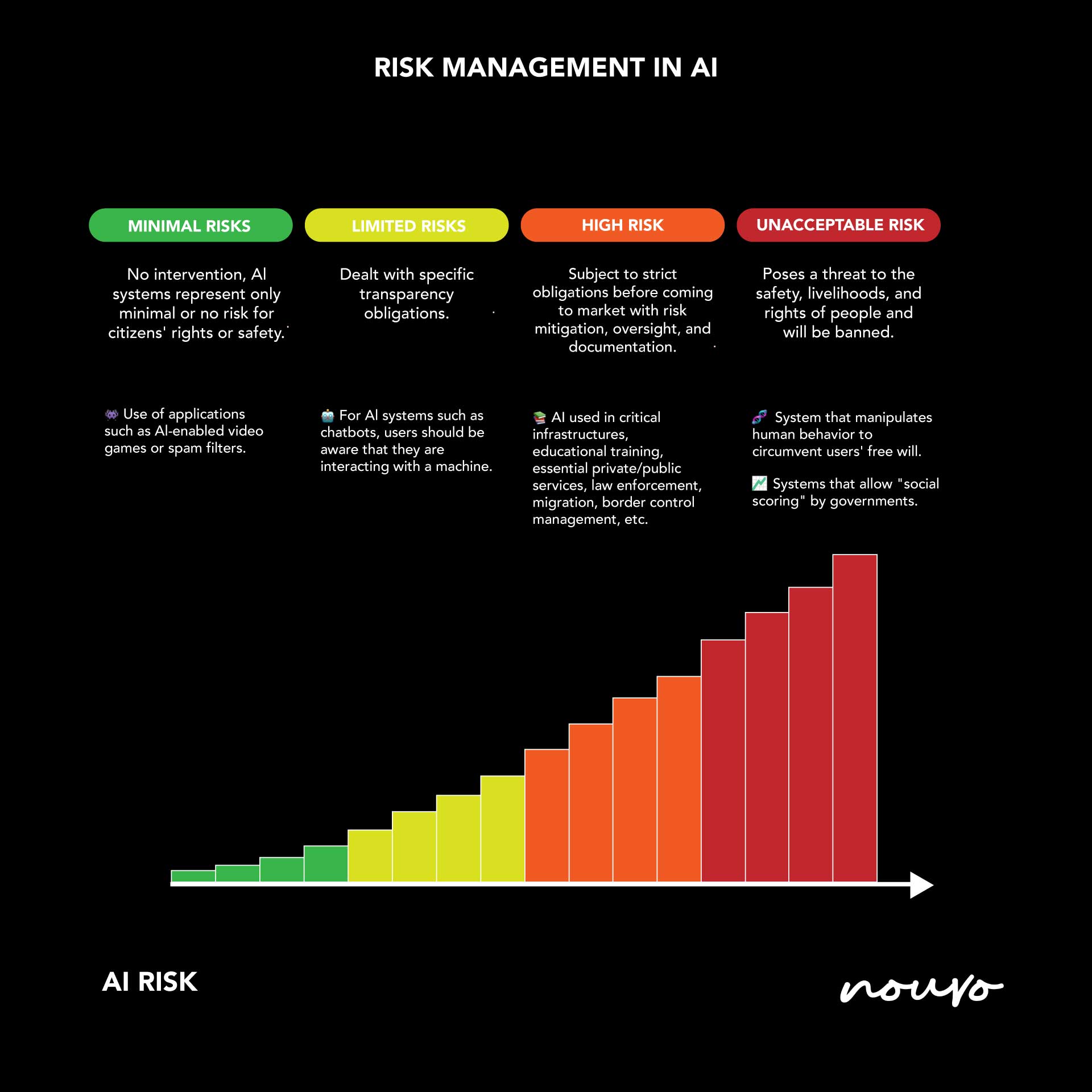

Risk Classification & Compliance

Companies use frameworks such as the OECD AI Principles, the EU AI Act, and US Executive Order 13960 to structure AI risk management. Some create their own four-tier scale (1-4) to classify AI use cases from unacceptable risk to minimal risk.
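To make the tiering idea concrete, here is a minimal sketch of what an in-house 1-4 scale could look like in Python. Only the endpoints (unacceptable and minimal) come from the text above. The two middle labels, the `UseCase` fields, and the screening questions are hypothetical placeholders, not legal tests from any framework.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskTier(IntEnum):
    """Internal 1-4 scale. The two middle labels are an assumption."""
    UNACCEPTABLE = 1
    HIGH = 2
    LIMITED = 3
    MINIMAL = 4


@dataclass
class UseCase:
    name: str
    prohibited_practice: bool        # hypothetical screening question
    affects_individual_rights: bool  # e.g. hiring, credit, or healthcare decisions
    interacts_with_public: bool      # e.g. a customer-facing chatbot


def classify(use_case: UseCase) -> RiskTier:
    """Return the most severe tier whose screening question is answered yes."""
    if use_case.prohibited_practice:
        return RiskTier.UNACCEPTABLE
    if use_case.affects_individual_rights:
        return RiskTier.HIGH
    if use_case.interacts_with_public:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


chatbot = UseCase("support chatbot", False, False, True)
print(classify(chatbot).name)  # LIMITED
```

Checking the most severe question first means a use case that matches several criteria always lands in the strictest applicable tier. A real scheme would replace these three yes/no flags with criteria agreed with legal and compliance teams.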

External Pressures & AI Adoption

Pressure from startups and competitors pushes businesses to adopt generative AI quickly, often for use cases that are essential but not strategic. Thoughtful prioritisation is key to balancing risk, investment, and ROI.

Proactive Risk Management

- Regular testing & compliance checks are essential to avoid bias and legal issues.

- “Privacy by design” and ethical AI principles help maintain trust.

- AI vendors often build risk management into their products, but companies must still continuously evaluate their own AI systems.
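As one illustration of what a regular bias test might involve, the sketch below compares approval rates between groups, a simple check sometimes called demographic parity. The sample data, the function names, and the 0.2 alert threshold are all made up for illustration. A real programme would choose metrics and thresholds with legal and domain experts.

```python
from collections import defaultdict


def selection_rates(records):
    """Share of positive decisions per group, from (group, approved) pairs."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(records):
    """Difference between the highest and lowest group selection rate."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical decisions from an AI system: (group label, approved?)
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

THRESHOLD = 0.2  # hypothetical alert level, not a regulatory figure
gap = parity_gap(decisions)
if gap > THRESHOLD:
    print(f"Review needed: selection-rate gap of {gap:.2f}")
```

A gap above the threshold does not prove unlawful bias. It is a signal that the system deserves a closer look before or during deployment.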

Bottom Line

Trust and compliance are crucial in AI adoption. Companies must stay ahead of risks to maintain credibility and customer confidence in the AI-driven era.

Download our AI Regulation Canvas

By downloading this worksheet, you agree to receive marketing communications from Nouvo Digital. You can unsubscribe at any time.